Ollama with OpenWebUI

About

Ollama with OpenWebUI gives you access to local AI language models through a web interface. Ollama runs open large language models (LLMs) on machines you control, so your data stays private and requests avoid the round trip to a cloud-based AI service. Open WebUI complements this with a browser-based chat interface for working with those models.

This integration lets you generate text, brainstorm ideas, draft content, and get help with coding or research, all while keeping full control over your AI environment. Whether you’re a developer, writer, or researcher, combining Ollama’s local model execution with Open WebUI’s browser interface gives you both privacy and convenience.

Getting started involves four steps: install Ollama, download your preferred models, set up Open WebUI, and connect the two. From there, you can customize prompts, adjust generation settings, and generate text from your web browser.

Create virtual machine

- Sign in to the Azure portal and type virtual machines in the search bar.

- Under Services, select Virtual machines.

- In the Virtual machines page, select Add. The Create a virtual machine page opens.

- In the Basics tab, under Project details, make sure the correct subscription is selected, then choose Create new under Resource group and type myResourceGroup for the name.

- Under Instance details, type myVM for the Virtual machine name, choose East US for your Region, and choose Ubuntu 18.04 LTS for your Image. Leave the other defaults.

- Under Administrator account, select SSH public key, type your user name, then paste in your public key. Remove any leading or trailing white space in your public key.

- Under Inbound port rules > Public inbound ports, choose Allow selected ports and then select SSH (22) and HTTP (80) from the drop-down.

- Leave the remaining defaults and then select the Review + create button at the bottom of the page.

- On the Create a virtual machine page, you can see the details about the VM you are about to create. When you are ready, select Create.
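If you prefer the command line, the portal steps above can be sketched with the Azure CLI, for example from Azure Cloud Shell, where `az` is preinstalled and already signed in. This is a minimal sketch, not part of the original instructions: the helper name `create_ollama_vm` is hypothetical, and the image URN in the example should correspond to the Ubuntu 18.04 LTS image named above. If you are deploying a marketplace image instead, substitute that image's URN.

```shell
# Hypothetical helper mirroring the portal steps with the Azure CLI.
# Assumes `az login` has been run and the correct subscription is selected.
# Usage: create_ollama_vm <resource-group> <vm-name> <location> <image-urn>
create_ollama_vm() {
  local rg="$1" vm="$2" location="$3" image="$4"

  # Portal: Project details > Create new resource group
  az group create --name "$rg" --location "$location" || return 1

  # Portal: Instance details + Administrator account (SSH public key).
  # --generate-ssh-keys creates a key pair in ~/.ssh only if one is missing;
  # --nsg-rule SSH adds an inbound rule for port 22.
  az vm create \
    --resource-group "$rg" \
    --name "$vm" \
    --image "$image" \
    --admin-username azureuser \
    --generate-ssh-keys \
    --nsg-rule SSH || return 1

  # Portal: Public inbound ports > HTTP (80)
  az vm open-port --resource-group "$rg" --name "$vm" --port 80
}
```

Example:

$ create_ollama_vm myResourceGroup myVM eastus Canonical:UbuntuServer:18.04-LTS:latest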

It will take a few minutes for your VM to be deployed. When the deployment is finished, move on to the next section.

Connect to virtual machine

Create an SSH connection with the VM.

- Select the Connect button on the overview page for your VM.

- On the Connect to virtual machine page, keep the default options to connect by IP address over port 22. Under Login using VM local account, a connection command is shown. Select the button to copy the command. The following example shows what the SSH connection command looks like:

ssh azureuser@<ip>

- In the same Bash shell you used to create your SSH key pair, paste the SSH connection command to start an SSH session. If you used Azure Cloud Shell, you can reopen it by selecting >_ again or by going to https://shell.azure.com/bash.
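The same connection can also be made from the command line by looking up the VM's public IP with the Azure CLI and passing it to ssh. This is a sketch under assumptions: the helper name `ssh_to_vm` is hypothetical, `az` must already be signed in, and the admin account is the default `azureuser` shown in the example command above.

```shell
# Hypothetical helper: find the VM's public IP with the Azure CLI, then SSH in.
# Usage: ssh_to_vm <resource-group> <vm-name>
ssh_to_vm() {
  local rg="$1" vm="$2" ip
  # -d (--show-details) adds runtime fields such as publicIps to the output
  ip="$(az vm show -d --resource-group "$rg" --name "$vm" --query publicIps --output tsv)" || return 1
  if [ -z "$ip" ]; then
    echo "No public IP address found for $vm" >&2
    return 1
  fi
  ssh "azureuser@$ip"
}
```

Example:

$ ssh_to_vm myResourceGroup myVM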

Getting Started with Ollama and Open WebUI

After successfully connecting via SSH, you’re ready to set up Ollama with Open WebUI. Here’s how to get everything running:

Key Components:

- Ollama runs locally on port 11434

- Open WebUI operates as a Docker container using port 3000
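Before installing models, you can confirm from your SSH session that both components answer on these ports. This sketch assumes curl is available on the VM; it queries Ollama's `/api/version` endpoint and the Open WebUI root page, and the function name `check_services` is hypothetical.

```shell
# Run on the VM. Hypothetical helper: checks that Ollama (11434) and
# Open WebUI (3000) return a successful HTTP response on localhost.
check_services() {
  local status=0
  # GET /api/version is an Ollama REST endpoint that reports the server version
  if curl -fsS -o /dev/null http://127.0.0.1:11434/api/version; then
    echo "Ollama: OK (port 11434)"
  else
    echo "Ollama: not reachable on port 11434" >&2
    status=1
  fi
  # For Open WebUI, any non-error response from the root page counts as "up"
  if curl -fsS -o /dev/null http://127.0.0.1:3000; then
    echo "Open WebUI: OK (port 3000)"
  else
    echo "Open WebUI: not reachable on port 3000" >&2
    status=1
  fi
  return "$status"
}
```

Example:

$ check_services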

Step 1: Installing Ollama LLM Models

Once logged in, install the language models you want to use. For example, to download and start Meta’s openly available Llama 3 model, run the following command. On first use, ollama run downloads the model and then opens an interactive chat prompt:

$ ollama run llama3
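To download models without entering the interactive prompt, `ollama pull` fetches a model and exits. The helper below, whose name `pull_models` is hypothetical, pulls several models in one go; `mistral` in the example is simply another model from the Ollama library, used for illustration.

```shell
# Hypothetical helper: download one or more Ollama models without starting a chat.
# Stops at the first model that fails to download.
pull_models() {
  local model
  for model in "$@"; do
    echo "Pulling $model..."
    ollama pull "$model" || return 1
  done
}
```

Example:

$ pull_models llama3 mistral

You can also pass a prompt directly, which prints a single response and returns to the shell:

$ ollama run llama3 "Why is the sky blue?"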

Quick Tips:

- To exit the LLM interface and return to your terminal, press Ctrl + D

- View your installed models anytime with:

$ ollama list

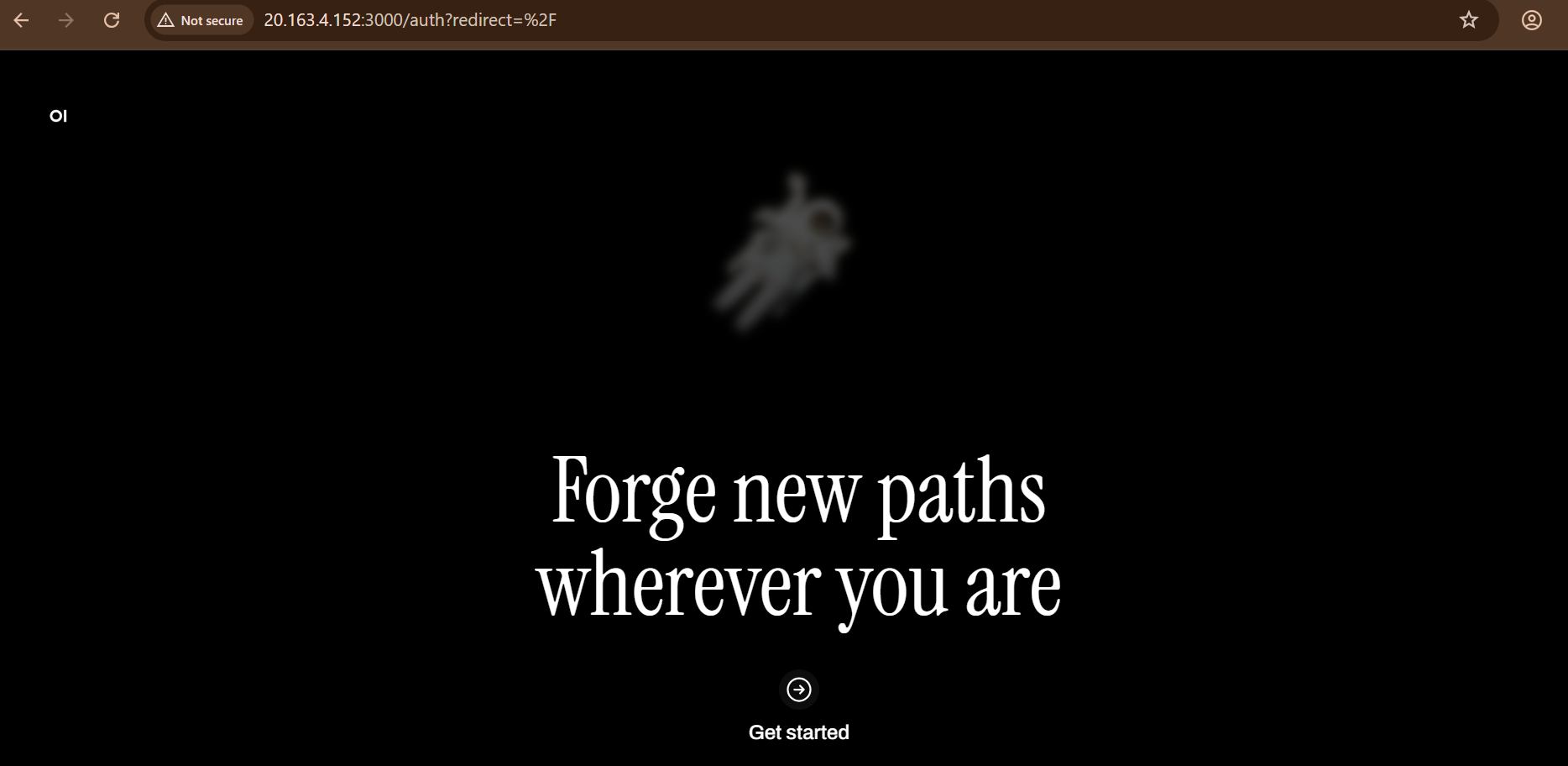
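When scripting, it can help to check whether a model is already installed before pulling it. This sketch parses the table printed by ollama list, assuming its current layout: a header row, then one model per line with the name (such as llama3:latest) in the first column. That layout is not a stable interface, so treat the hypothetical `has_model` helper as illustrative.

```shell
# Hypothetical helper: succeed if a model appears in `ollama list`.
# A bare name such as llama3 is matched as llama3:latest.
has_model() {
  local want="$1"
  case "$want" in
    *:*) ;;                       # already has a tag
    *) want="$want:latest" ;;     # default tag
  esac
  # Skip the header row; exit 0 only if some NAME column matches exactly
  ollama list | awk -v want="$want" 'NR > 1 && $1 == want { found = 1 } END { exit !found }'
}
```

Example:

$ has_model llama3 || ollama pull llama3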

Step 2: Accessing Open WebUI

Open WebUI runs in a Docker container. To verify its status:

$ sudo docker ps

Note: The container might need a few minutes to initialize completely.
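Because the container can take a few minutes to start, a small polling loop saves refreshing the browser repeatedly. This sketch assumes Open WebUI is published on port 3000 of the VM, as listed in the Port Reference, and that curl is installed; the name `wait_for_webui` and its default retry count and interval are illustrative choices.

```shell
# Hypothetical helper: poll Open WebUI on port 3000 until it responds.
# Usage: wait_for_webui [retries] [seconds-between-tries]
wait_for_webui() {
  local retries="${1:-30}" interval="${2:-10}" i=1
  while [ "$i" -le "$retries" ]; do
    if curl -fsS -o /dev/null http://127.0.0.1:3000; then
      echo "Open WebUI is up after $i attempt(s)"
      return 0
    fi
    sleep "$interval"
    i=$((i + 1))
  done
  echo "Open WebUI did not respond on port 3000; check 'sudo docker ps' and the container logs" >&2
  return 1
}
```

Example:

$ wait_for_webui 30 10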

After installing your LLMs, access Open WebUI in your browser at:

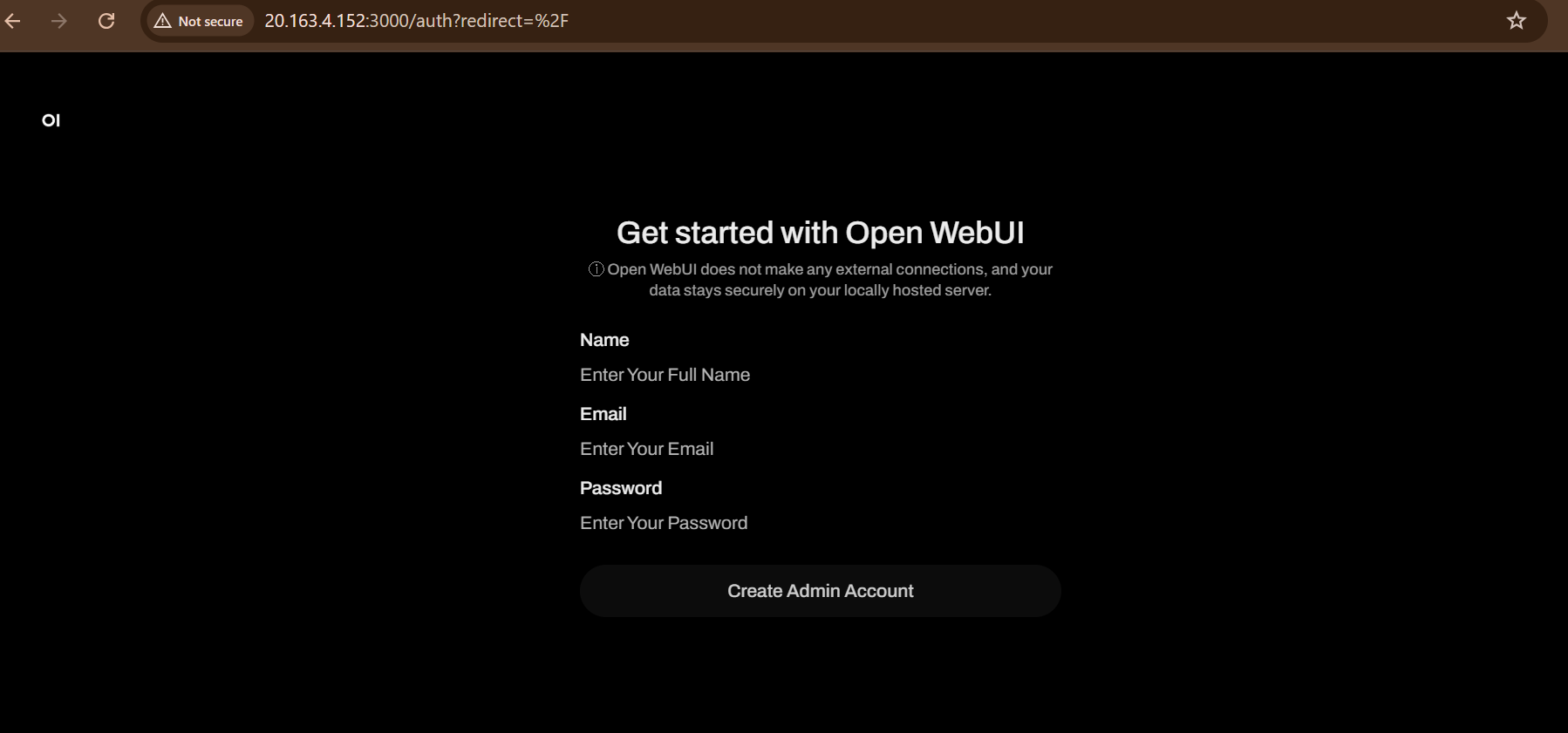
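If port 3000 is not open in the VM's network security group (the portal steps above only open SSH and HTTP), one option is an SSH tunnel from your own computer. This sketch assumes the azureuser account and your VM's public IP; the helper name `open_webui_tunnel` is hypothetical. While the command runs, browse to http://localhost:3000 (or the local port you chose) on your own machine.

```shell
# Hypothetical helper, run on your local machine: forward a local port to
# Open WebUI (port 3000) on the VM over SSH.
# Usage: open_webui_tunnel <vm-ip> [local-port]
open_webui_tunnel() {
  local ip="$1" local_port="${2:-3000}"
  # -N: run no remote command, only forward ports
  # -L local_port:127.0.0.1:3000 : local port -> port 3000 on the VM's loopback
  ssh -N -L "${local_port}:127.0.0.1:3000" "azureuser@${ip}"
}
```

Example:

$ open_webui_tunnel <ip>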

First-Time Setup:

- On the login page, click “Sign Up” to create your credentials

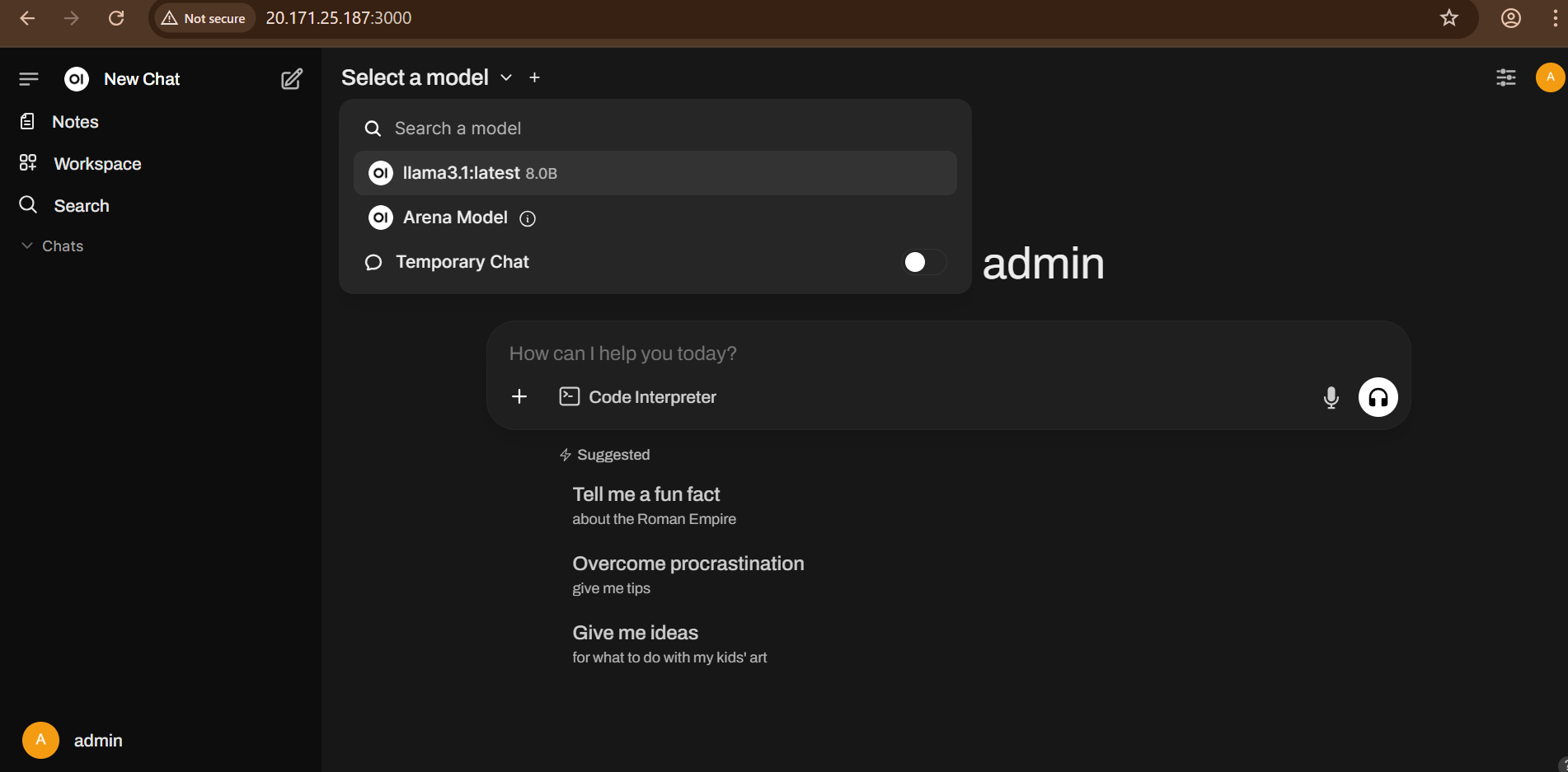

- Once logged in, select your preferred model from the dropdown menu

Response times depend on your VM’s size (CPU, memory, and whether a GPU is available). If responses are slow, consider resizing the VM to a larger size.
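Resizing can be done from the VM's Size page in the portal or with the Azure CLI. This hypothetical helper lists the sizes available to the VM when called without a size, and otherwise resizes it; run it wherever the Azure CLI is signed in (for example, Cloud Shell), not inside the VM. Resizing a running VM restarts it, and Standard_D4s_v3 in the example is only an illustration, since available sizes vary by region.

```shell
# Hypothetical helper: list resize options, or resize the VM to a given size.
# Usage: resize_vm <resource-group> <vm-name> [size]
resize_vm() {
  local rg="$1" vm="$2" size="$3"
  if [ -z "$size" ]; then
    # No size given: show the sizes this VM can move to and stop
    az vm list-vm-resize-options --resource-group "$rg" --name "$vm" --output table
    return
  fi
  az vm resize --resource-group "$rg" --name "$vm" --size "$size"
}
```

Example:

$ resize_vm myResourceGroup myVM
$ resize_vm myResourceGroup myVM Standard_D4s_v3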

Port Reference:

- Ollama: TCP 11434 (accessible from within the VM at http://127.0.0.1:11434)

- Open WebUI: TCP 3000
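Because Ollama exposes a REST API on port 11434, scripts on the VM can send prompts without going through Open WebUI. This sketch posts to Ollama's /api/generate endpoint with streaming turned off, so the reply arrives as one JSON object whose response field holds the text. The name `ask_ollama` is hypothetical, and the prompt is not JSON-escaped, so keep it free of double quotes and backslashes.

```shell
# Hypothetical helper, run on the VM: send one prompt to Ollama's REST API.
# Usage: ask_ollama <model> <prompt>
ask_ollama() {
  local model="$1" prompt="$2"
  # "stream": false returns a single JSON object instead of a stream of chunks.
  # The prompt is inserted into the JSON as-is (no escaping).
  curl -sS http://127.0.0.1:11434/api/generate \
    -d "{\"model\": \"$model\", \"prompt\": \"$prompt\", \"stream\": false}"
}
```

Example:

$ ask_ollama llama3 "Explain what a Docker container is in one sentence."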

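To control which of these ports can be reached from outside the VM, add inbound rules to the VM’s network security group (NSG). For details, consult the Azure Network Security Groups documentation.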
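If you want to reach Open WebUI directly on port 3000, you can add an inbound NSG rule; limiting the source to your own public IP address avoids exposing the interface to the whole internet. In this sketch the helper name, rule name, and priority 1010 are assumptions. The NSG name depends on how the VM was created (the portal typically names it myVM-nsg, while az vm create uses myVMNSG), so check the VM's Networking page first.

```shell
# Hypothetical helper: allow inbound TCP 3000 (Open WebUI) from one source address.
# Priority 1010 is an arbitrary example and must not collide with existing rules.
# Usage: allow_webui_from <resource-group> <nsg-name> <source-ip>
allow_webui_from() {
  local rg="$1" nsg="$2" source_ip="$3"
  az network nsg rule create \
    --resource-group "$rg" \
    --nsg-name "$nsg" \
    --name Allow-OpenWebUI-3000 \
    --priority 1010 \
    --direction Inbound \
    --access Allow \
    --protocol Tcp \
    --source-address-prefixes "$source_ip" \
    --destination-port-ranges 3000
}
```

Example:

$ allow_webui_from myResourceGroup myVM-nsg <your-ip>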

Highlights

- Local Model Execution: Run AI language models on infrastructure you control for maximum privacy and control.

- Privacy-Focused: Keep your data and prompts secure by avoiding cloud-based AI services.

- Multi-Model Support: Easily switch between different language models managed by Ollama within the same interface.

- Customizable Prompts: Tailor prompt templates and generation parameters like temperature, max tokens, and top-p for personalized output.

- Open-Source UI: Benefit from a continuously improving community-driven web interface with frequent updates.
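Besides adjusting these settings in Open WebUI, you can fix a system prompt and generation parameters on the Ollama side with a Modelfile and ollama create. In a Modelfile, num_predict plays the role of max tokens. The helper name, model name, system prompt, and parameter values below are illustrative assumptions, not recommendations; the new model should then appear alongside the others in Open WebUI's model dropdown.

```shell
# Hypothetical helper: create a custom Ollama model with a system prompt and
# fixed generation parameters, using a temporary Modelfile.
# Usage: create_custom_model <new-model-name> [base-model]
create_custom_model() {
  local name="$1" base="${2:-llama3}" file status
  file="$(mktemp)" || return 1
  # Heredoc body starts at column 0 so the Modelfile has no leading spaces
  cat > "$file" <<EOF
FROM $base
PARAMETER temperature 0.7
PARAMETER top_p 0.9
PARAMETER num_predict 512
SYSTEM """You are a concise technical writing assistant."""
EOF
  ollama create "$name" -f "$file"
  status=$?
  rm -f "$file"
  return "$status"
}
```

Example:

$ create_custom_model concise-llama3 llama3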